3D Production Pipeline

MDU115 Research and Development Blog

Pioneers

Ed Catmull

Ed Catmull is one of the greatest contributors to the computer graphics industry. He co-founded Pixar Animation Studios and, at the time of writing, serves as president of Pixar and Walt Disney Animation Studios. He is credited with pioneering work on texture mapping, anti-aliasing, subdivision surfaces (Catmull-Clark) and z-buffering.

Image source: http://ptex.us/ptexpaper.html
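To show what one of these ideas does, here is a toy sketch of z-buffering: every pixel remembers the depth of the nearest surface drawn so far, and a new fragment only overwrites the pixel if it is nearer. The buffer size, the rectangles, their depth values and the "smaller z is nearer" convention are hypothetical choices for illustration; real renderers rasterize triangles and interpolate depth per fragment.

```python
import math

# Toy z-buffer: buffer size, shapes and depths are hypothetical illustration values.
WIDTH, HEIGHT = 8, 4

def make_buffers(width, height, background="."):
    # Depth starts at +infinity, so any fragment is nearer than "nothing drawn yet".
    depth = [[math.inf] * width for _ in range(height)]
    color = [[background] * width for _ in range(height)]
    return depth, color

def draw_rect(depth, color, x0, y0, x1, y1, z, label):
    """Write a flat rectangle at depth z, keeping only fragments nearer than what is stored."""
    for y in range(y0, y1):
        for x in range(x0, x1):
            if z < depth[y][x]:  # depth test: smaller z means closer to the camera
                depth[y][x] = z
                color[y][x] = label

depth, color = make_buffers(WIDTH, HEIGHT)
draw_rect(depth, color, 0, 0, 6, 4, z=5.0, label="A")  # mid-distance rectangle
draw_rect(depth, color, 3, 1, 8, 3, z=2.0, label="B")  # nearest rectangle
draw_rect(depth, color, 1, 0, 4, 4, z=9.0, label="C")  # farthest, drawn last
for row in color:
    print("".join(row))
```

Because each pixel keeps the nearest depth seen so far, the result does not depend on draw order: rectangle C is drawn last but is hidden everywhere behind A and B.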

Ken Perlin

Ken Perlin is another major contributor to 3D graphics. He is responsible for Perlin noise and hypertexture, as well as work on real-time character animation and stylus-based input devices. These techniques are used every day in 3D production.

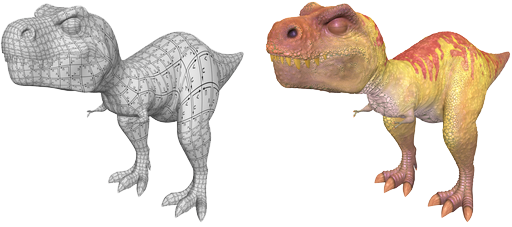
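Perlin noise is the most widely used of these. Below is a minimal 2D sketch in the style of Perlin's "improved noise": each integer lattice corner is hashed to a pseudo-random gradient, the dot products between those gradients and the offsets to the sample point are blended with the fade curve 6t^5 - 15t^4 + 10t^3. The seeded shuffle, the eight-direction gradient set and the helper names (`make_permutation`, `perlin2`) are my own assumptions, not Perlin's reference implementation, which uses a fixed permutation table.

```python
import math
import random

# Sketch only: helper names and this gradient set are illustrative assumptions.
GRADIENTS = [(1, 0), (-1, 0), (0, 1), (0, -1), (1, 1), (-1, 1), (1, -1), (-1, -1)]

def make_permutation(seed=0):
    """Shuffle 0..255 with a seeded RNG and repeat it so p[i + 1] never runs off the end."""
    rng = random.Random(seed)
    p = list(range(256))
    rng.shuffle(p)
    return p + p

def fade(t):
    # Easing curve 6t^5 - 15t^4 + 10t^3 (flat first and second derivative at 0 and 1).
    return t * t * t * (t * (t * 6 - 15) + 10)

def lerp(a, b, t):
    return a + t * (b - a)

def grad(h, dx, dy):
    # Dot product of a hashed gradient with the offset from that lattice corner.
    gx, gy = GRADIENTS[h % 8]
    return gx * dx + gy * dy

def perlin2(x, y, perm):
    """2D gradient noise: zero at every integer lattice point, smooth in between."""
    xi, yi = math.floor(x) & 255, math.floor(y) & 255  # lattice cell (wraps every 256)
    xf, yf = x - math.floor(x), y - math.floor(y)      # position inside the cell
    u, v = fade(xf), fade(yf)
    # Hash each of the four cell corners to a pseudo-random gradient index.
    aa = perm[perm[xi] + yi]
    ab = perm[perm[xi] + yi + 1]
    ba = perm[perm[xi + 1] + yi]
    bb = perm[perm[xi + 1] + yi + 1]
    # Blend the corner contributions along x, then along y.
    bottom = lerp(grad(aa, xf, yf), grad(ba, xf - 1, yf), u)
    top = lerp(grad(ab, xf, yf - 1), grad(bb, xf - 1, yf - 1), u)
    return lerp(bottom, top, v)

perm = make_permutation(seed=42)
row = [perlin2(x * 0.25, 1.5, perm) for x in range(16)]
print(" ".join(f"{v:+.2f}" for v in row))
```

Summing several such calls at doubling frequency and halving amplitude (fractal Brownian motion) gives the cloudy patterns typically used for procedural textures.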

Krishnamurthy and Levoy

These two laid the groundwork for normal mapping: in 1996 they introduced the idea of capturing the detail of a high-polygon model in a map applied to a simpler surface. Without normal mapping, video games could not look as detailed as they do today, because it lets low-polygon models look like highly detailed ones.

Image source: http://forum.toribash.com/showthread.php?t=497808
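To show how a normal map fakes detail: each texel stores a surface direction rather than a colour, and the lighting uses that direction instead of the polygon's own normal. The sketch below decodes 8-bit tangent-space texels and feeds them into a plain Lambert diffuse term, so texels on one flat quad shade differently. It assumes the common [0, 255] to [-1, 1] encoding with +Y in the green channel (DirectX-style maps flip green); the quad, light and helper names are hypothetical. It illustrates normal-map shading in general, not Krishnamurthy and Levoy's surface-fitting method.

```python
import math

# Sketch assuming OpenGL-style (+Y green) tangent-space maps; names are illustrative.
def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return [c / length for c in v]

def decode_normal(r, g, b):
    """Map an 8-bit tangent-space texel to a unit vector: c / 255 * 2 - 1 per channel."""
    return normalize([c / 255.0 * 2.0 - 1.0 for c in (r, g, b)])

def tangent_to_world(n, tangent, bitangent, normal):
    """Rotate a tangent-space normal into world space using the surface's TBN basis."""
    return normalize([n[0] * tangent[i] + n[1] * bitangent[i] + n[2] * normal[i]
                      for i in range(3)])

def lambert(normal, light_dir):
    """Diffuse term max(0, N . L); both vectors are expected to be unit length."""
    return max(0.0, sum(a * b for a, b in zip(normal, light_dir)))

# Hypothetical flat quad facing +Z, so its TBN basis is just the world axes.
T, B, N = [1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]
light = normalize([1.0, 0.0, 1.0])  # direction to a light above and toward +X

def shade(texel):
    return lambert(tangent_to_world(decode_normal(*texel), T, B, N), light)

flat = shade((128, 128, 255))    # neutral blue texel: normal barely perturbed
toward = shade((218, 128, 218))  # texel tilted toward +X, i.e. toward the light
away = shade((38, 128, 218))     # texel tilted toward -X, away from the light
print(f"flat={flat:.3f} toward={toward:.3f} away={away:.3f}")
```

The geometry never changes; the three brightness values come purely from the texels, which is the effect that lets a low-polygon model pass for a detailed one.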

Inspirations

Tal Peleg http://www.tp-artwork.com/

Tal Peleg has worked as an animator on many projects, including The Last of Us, Uncharted 4 and A Christmas Carol. One project that stands out, though, is Dante's Redemption, a fan-made short based on Dante's Inferno. Tal's credits on this project are Director, Scene Layout, Keyframe Animator, Lighting, Compositing, Editing and Matte Painting.

Written by Blake Head